It's been two weeks since DevLearn 2017, and I'm still collecting my thoughts.

To get things going, here's a recap of the session I facilitated, number 715 on the agenda: "BYOL: Building an Adaptive Course in Storyline".

The four objectives of the session were to learn how to:

- customize course content based on pretest results

- show remedial content only when needed

- end the final test early—as soon as the learner passes or fails

- make your course adjust itself based on learner performance

Are you sitting comfortably? Then we'll begin.

First off, I had a blast doing this session. I'm very grateful to the eLearning Guild and my employer for the opportunity to speak, and even more so to the brave folks who attended, despite it being in the first slot on the last day (and up against sessions from some big-name speakers).

The files

If you want to follow along, feel free (feel encouraged!) to grab the files.

There are six files there, but you'll only need two. Just pick one from each group:

The template (which has all the goodness baked in already):

- DL17_715_Blair_SL1Template.story (the Storyline 1 version)

- DL17_715_Blair_SL2Template.story (the Storyline 2 version)

- DL17_715_Blair_SL360Template.story (the Storyline 360 version)

The working file:

- DL17_715_Blair_SL1WorkingFile.story (the Storyline 1 version)

- DL17_715_Blair_SL2WorkingFile.story (the Storyline 2 version)

- DL17_715_Blair_SL360WorkingFile.story (the Storyline 360 version)

Feature 1: Customize course content based on pretest results

We need two things to make this work: variables and triggers.

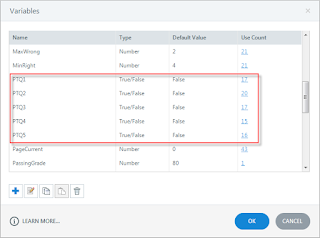

First up, we need to track how each pretest question was answered. I used a Boolean variable for each question (e.g. PTQ1 for pretest question 1). If the learner got the question right, we'll set it to true.

Once that's done, you can use that variable in triggers that control your course's branching. For example, I have a branch at page 3 in the first three chapters of the template. If the learner correctly answered the associated pretest question, they'll continue to page 4. If they didn't, they'll proceed to a bonus slide before continuing to page 4.
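If it helps to see the branch written out, here's a rough sketch in plain Python. To be clear, this is not Storyline code (the template does all of this with triggers); it only models the decision. The function name and slide labels are made up for illustration.

```python
# A plain-Python model of the page 3 branch. Not Storyline code:
# the real course does this with triggers and a Boolean variable.

def chapter_path(pretest_correct: bool) -> list[str]:
    """Return the slides a learner sees from page 3 to page 4.

    pretest_correct plays the role of a variable like PTQ1: True only
    if the associated pretest question was answered correctly.
    """
    path = ["page 3"]
    if not pretest_correct:
        # Missed the pretest question: detour through the bonus slide.
        path.append("bonus slide")
    path.append("page 4")
    return path


if __name__ == "__main__":
    print(chapter_path(True))
    print(chapter_path(False))
```

Either way the learner ends up on page 4; the variable only decides whether the bonus slide sits in between.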

Feature 2: Customize course content based on in-course performance

This is essentially the same thing, only we're using a checkpoint question in the chapter instead of a pretest question.

First up, we need a checkpoint question. I have one of those on page 3 in the fourth and fifth chapters of the template. If the learner correctly answers the checkpoint question, they'll continue to page 4. If they don't, they'll proceed to a bonus slide before continuing to page 4. The triggers that handle that are on the "incorrect" feedback layer for the checkpoint question.

Feature 3: End the final test early

This is another "triggers and variables" solution.

We've got a few variables:

- CountRight: tracks how many questions the learner answers correctly

- CountWrong: tracks how many questions the learner answers incorrectly

- MinRight: the threshold at which we'll end the test if the learner's doing well

- MaxWrong: how many questions the learner can get wrong before we end the test

With those variables in place, there are a few things we'll do with our triggers:

- Increment CountRight when the learner lands on a "correct" feedback layer, or CountWrong when they land on an "incorrect" one

- Jump to the final results screen if either count meets the associated threshold
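The counting and early-exit logic above can be sketched in plain Python. Again, this is only a model of what the triggers do, not something you'd paste into Storyline. The class and method names are hypothetical, and I'm assuming "meets the threshold" means "greater than or equal to".

```python
from dataclasses import dataclass


@dataclass
class FinalTest:
    """Plain-Python model of the early-exit triggers (not Storyline code)."""

    min_right: int        # MinRight: end the test once this many are correct
    max_wrong: int        # MaxWrong: end the test once this many are wrong
    count_right: int = 0  # CountRight
    count_wrong: int = 0  # CountWrong

    def record(self, correct: bool) -> str:
        """Update the counters for one answer and say where to go next."""
        if correct:
            self.count_right += 1
        else:
            self.count_wrong += 1
        # Assumption: a count "meets" its threshold when it is >= the threshold.
        if self.count_right >= self.min_right or self.count_wrong >= self.max_wrong:
            return "results"
        return "next question"
```

For example, with `FinalTest(min_right=2, max_wrong=2)`, answering right, wrong, right sends the learner to the results screen after the third question. Those threshold values are made up; use whatever suits your test.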

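This is a bonus feature in the template: automatically answering a final test question when the learner already got the associated pretest question right. It takes a little more work than any of the previous ones, but in the right situation it's definitely worth the effort.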

You'll need the same tracking variables from feature 1 (i.e. PTQ1 and so on).

To make things a little easier on myself (I'm not lazy, I'm "energy efficient"), I added shapes to the final test questions. I hid them off-slide, at the top. They have triggers on them to do the following, if the associated pretest question was correctly answered:

- Answer the question correctly (e.g. final test question 1 is "true").

- Submit the interaction

- Increment the page number

- Increment CountRight

- Jump to the next question
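Here's the same sequence as a plain-Python sketch, in case it's easier to follow as code. It's a model of the hidden shapes' triggers, not Storyline code, and the `state` dictionary and its keys are invented for illustration.

```python
# Plain-Python model of the hidden-shape triggers. Not Storyline code:
# the state dictionary and its keys are hypothetical stand-ins.

def auto_answer(pretest_correct: bool, state: dict) -> bool:
    """Auto-complete the current final test question if the pretest flag is True.

    Returns True if the question was answered automatically, False if the
    learner still has to answer it themselves.
    """
    if not pretest_correct:
        return False
    state["submitted"].append("correct")  # answer correctly, then submit
    state["page_number"] += 1             # increment the page number
    state["count_right"] += 1             # increment CountRight
    state["question_index"] += 1          # jump to the next question
    return True
```

Starting from `{"submitted": [], "page_number": 1, "count_right": 0, "question_index": 0}`, a call with `pretest_correct=True` records one correct answer and moves every counter up by one. A call with `False` leaves the state untouched.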

Your turn

What's your favourite feature here? How are you planning to use it?

Can you think of something I should add?

Chime in, I'd love to hear from you!
